Interesting mini-debate between Simon Wren-Lewis and Mark Thoma on one hand and Paul Krugman on the other. The question is: What gave rise to the New Classical revolution in macro in the late 70s and early 80s?

I generally side with Krugman here, though I wasn't there to see it, so of course I don't know.

The term "New Classical" is usually used to refer to three things:

1. The Lucas islands model,

2. The methodological revolution of rational expectations, microfoundations, etc. that created DSGE, and

3. RBC models.

I don't know why these things are thrown together, except that Lucas worked on all of them. But anyway.

Wren-Lewis says that the stagflation of the 70s wasn't responsible for the New Classical revolution, because old-style "Keynesian" models could explain stagflation with some reasonable modifications. He says that people just thought the New Classical theories were more methodologically appealing, and that that's why the revolution happened.

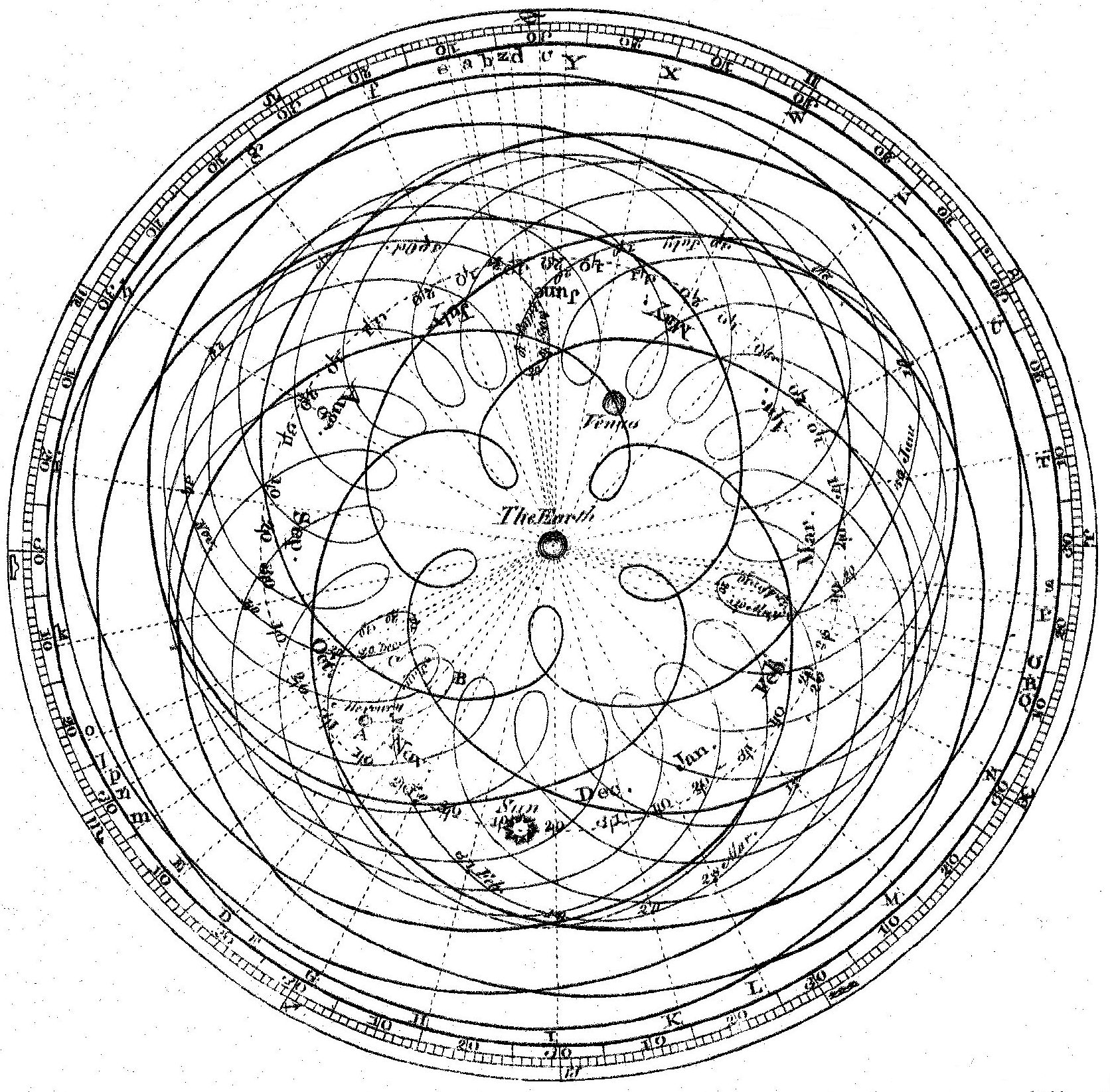

But that doesn't sound very credible to me. Sure, the old paradigm could explain the 70s, but it didn't predict it. You can always add some wrinkles after the fact to fit the last Big Thing that happened. When people saw the "Keynesian" economists (a label I'm using for the pre-Lucas aggregate-only modelers) adding what looked like epicycles, they probably did the sensible thing, narrowed their eyes, and said "Wait a sec, you guys are just tacking stuff on to cover up your mistake!" The 70s probably made aggregate-only macro seem like a degenerate research program.

The New Classicals, on the other hand, got the 70s right from the very beginning. That may have been a lucky coincidence; Ed Prescott is not a big believer in the power of monetary policy to affect growth, and he may have just built his models to reflect his prior. It probably also helped that the New Classicals started their model-making with stagflation already well underway, and hence were not burdened by the historical baggage that the Keynesians had to bear. But for whatever reason, New Classicals started out predicting stagflation right off the bat, and I just cannot imagine that that fact didn't help their case substantially (though people also surely liked the methodological innovations).

Now this raises the question of how the 2008 crisis and Great Recession are going to affect the field. Generally these seem to have caused macro people to shift to financial-frictions DSGE models. The question is, will people lump those in with earlier DSGE models, and say that macro guys just added an epicycle after the fact (as the Keynesians seemed to in the 70s), and start to suspect that DSGE itself is a degenerate research program? Or will people view financial-frictions macro as a new thing, and give it credit for getting the crisis right (as New Classical got the credit for getting stagflation right), and think financial-frictions DSGE is the new paradigm?

I guess time will tell.

A lot of economics exists simply to tell big money what they want to hear. It was of course Friedman who told big money that they existed only to have big money and their only duty was to have big money. This is why so much economics, like politics, passes ordinary people by in the main.

Chaos Theory is most relevant to us plebs. We do not experience equilibrium and rational actors; all we encounter is constant flux and raving lunatics. bill40

I don't think Prof. Smith wants to admit this, but it's pretty obvious to a more dispassionate observer.

Even if you look at less ideologically useful fields such as English literature, the dictates of those who hold the purse strings will in some small ways determine where the field moves. For instance, a multi-millionaire loves American literature, so he establishes a chair in American lit at his alma mater. There's one more job for a prestigious professor in American lit--but is this because American lit is more important or more relevant than 18th century British literature? Of course not.

This is indeed pretty bloody obvious.

"A lot of economics exists simply to tell big money what they want to hear. It was of course Friedman who told big money that they existed only to have big money and their only duty was to have big money."

Economists understand rational profit-maximizing behavior. When you see an economist promote a really suspicious and unlikely theory which is contrary to reality, and he makes a lot of money and gets a lot of accolades for doing so, you really ought to see this as a case of rational profit-maximizing behavior.

To put it another way, the "neoclassical" economists mostly sold out their intellectual integrity. Most people aren't rational profit-maximizers, but some people are -- the ones who sell out are.

Yes, whenever anyone says anything you think is wrong, they've probably been paid for it.

Of course this is paired up with 'chaos theory' mysticism, which (along with 'complexity') is the One Weird Trick for the engineering/CS crowd who bizarrely seem to think that the one thing missing from modern macro is complication.

Obviously, my chosen worldview is a Strange Attractor! ;-)

"A lot of economics exists simply to tell big money what they want to hear."

But not all of it. Confusing the two serves no purpose.

Oh my. I think I am going to get shrill.

I do not believe that Ptolemaic astronomers added epicycles. I don't think there is any legitimate evidence that the Ptolemaic model was changed after Ptolemy (except for refined parameter estimates, such as the year being closer to 365.2425 days than 365.25). It is very widely agreed that they did, but this belief is not based on any primary sources as far as I know.

I do not believe that any prominent Keynesian ever presented a model which was inconsistent with stagflation. It is widely believed that at some point Keynesians believed in a stable Phillips curve. Again, this belief is not based on any primary sources.

Here I disagree with Wren-Lewis, who says all that is needed for stagflation is an accelerationist Phillips curve. Even that is not needed. A Phillips curve which shifts up by 0.5 times lagged inflation is enough. This corresponds to a downward-sloping long-run Phillips curve, and it is consistent with the data from the 1970s. My estimate of that parameter using data through 1980q1 is:

`. reg ldwinf infcpi linfcpi unem if qtr<1980`

| `ldwinf` | Coef. | Std. Err. | t |
|---|---|---|---|
| `infcpi` | .4888773 | .0642374 | 7.61 |
| `linfcpi` | .0698199 | .0748645 | 0.93 |
| `unem` | -.1724548 | .1291306 | -1.34 |
| `_cons` | .0518619 | .0063418 | 8.18 |

This is roughly similar to estimates reported by 1971 (though not with my few variables from FRED).

The controversy between Friedman and the Paleo Keynesians was whether the sum of the coefficients on lagged inflation is one or less than one. Data from the 1970s did not answer this question.
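A minimal worked version of why the sum of coefficients matters, under assumptions that are labelled here rather than taken from the comment: read the regression above as $w_t = c + a_0\pi_t + a_1\pi_{t-1} - b\,u_t$, with $w_t$ the dependent variable `ldwinf` (apparently wage inflation), $\pi_t$ and $\pi_{t-1}$ the inflation regressors `infcpi` and `linfcpi`, $u_t$ = `unem`, and assume wage and price inflation coincide in a steady state (ignoring productivity growth). Writing $\lambda = a_0 + a_1$:

```latex
\begin{aligned}
&\text{Regression:} && w_t = c + a_0\,\pi_t + a_1\,\pi_{t-1} - b\,u_t, \qquad \lambda \equiv a_0 + a_1 \\
&\text{Steady state } (w_t = \pi_t = \pi_{t-1} = \bar\pi): && \bar\pi = c + \lambda\,\bar\pi - b\,u
  \;\Longrightarrow\; \bar\pi = \frac{c - b\,u}{1-\lambda}, \qquad
  \frac{d\bar\pi}{du} = -\frac{b}{1-\lambda} \quad (\lambda < 1) \\
&\text{Accelerationist case } (\lambda = 1): && 0 = c - b\,u \;\Longrightarrow\; u = c/b
  \quad \text{(vertical long-run curve)} \\
&\text{Point estimates above:} && \hat\lambda = 0.4888773 + 0.0698199 = 0.5586972, \qquad
  -\frac{\hat b}{1-\hat\lambda} = -\frac{0.1724548}{0.4413028} \approx -0.391
\end{aligned}
```

With $\lambda < 1$ the long-run curve slopes down (in whatever units the regression uses); only $\lambda = 1$ delivers Friedman's vertical curve.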

The standard definition of "stagflation" is a combination of high inflation and high unemployment. This can occur in a 1960s-era Keynesian model. Only after the fact did people (notably including Friedman, who knew otherwise) come up with the idea that Paleo Keynesians didn't model expected inflation or assume that the Phillips curve shifts up with expected inflation.

On who predicted what when, you really have to read working papers by James Forder http://www.economics.ox.ac.uk/Academic/james-forder . He is very convincing.

I think it is also useful to see how the Old Keynesians responded to Lucas's supply function paper. As a statement of the old Keynesian orthodoxy, I strongly recommend reading http://cowles.econ.yale.edu/P/cd/d03a/d0315.pdf. I note that Tobin agreed that Friedman was definitely right that eventually expected inflation would rise one for one with a permanent increase in inflation -- it was agreed in 1971 that this must be true, although the US data from the 70s which allegedly proved Friedman right and someone else wrong doesn't prove that it is true.

"I do not believe that Ptolemaic astronomers added epicycles."

I didn't mention Ptolemy, did I??

In the 60s, Paul Samuelson was pretty active in writing op-eds and papers about the Phillips curve, advocating more inflation as a way to reduce unemployment. In none of them did he mention that the Phillips curve might not be structural, which isn't quite the same thing as saying that he believed it was structural, but that is probably the message most of his readers would have gotten.

You are sort of right about Ptolemaic epicycles. They were first added to the Ptolemaic model by...Ptolemy, in the 2nd century AD. That said, Ptolemy did not create the whole model from scratch--there was a preexisting line of thought about astronomy to which Ptolemy added epicycles to make the aesthetic geometry fit with messy observations. So I'd say there's some truth to the narrative about epicycles having been added to the old model to make it workable.

Interesting passage from this paper: http://www.fsb.muohio.edu/fsb/ecopapers/docs/hallte-2010-08-paper.pdf

"In the interview, Robert Solow said “Paul Samuelson asked me when we were looking at these diagrams (of inflation and unemployment) for the first time, ‘Does that look like a reversible relation to you?’ What he meant was ‘Do you really think the economy can move back and forth along a curve like that?’ And I answered ‘Yeah I’m inclined to believe it,’ and Paul said ‘Me too’” (Leeson, 1997a, 145)."

Ptolemy added one epicycle. There are anecdotal accounts that some medieval European astronomers tried creating some many-epicycle models:

http://en.wikipedia.org/wiki/Deferent_and_epicycle#Epicycles

But since academic publishing was sketchy in those days, it's hard to know just how complex these got.

In any case, "epicycles" has just become slang for "free parameters", and as such I think it is a coherent enough metaphor to use in public discussion.

Noah cleared up most of it, and the Wikipedia article is a good start, but I would say Ptolemy codified an epicycle as proposed by Apollonius (who also coined the words parabola and hyperbola). The original epicycle was a way to model the varying apparent motion (of the moon) along its orbit, caused by the difference in orbital speed a body has at apogee and perigee. It is actually an observational approximation of Kepler's second law.

As shown in the article referenced by the Aquinas quote, epicycles were not modifications of some underlying principle but a substitute for a unifying theory of orbital motion in the face of observation.

Epicycles are more interesting as a description and quantification of how far a model based on theory differs from reality. The objection is to the idea that they were included in the Ptolemaic model as some underlying basis of the model and not just a useful tool to adjust the model to make better predictions.

The Antikythera Mechanism has two epicycles, incorporated through some genius work. The epicycle was a necessary transformation (literally a specialized Fourier transform) to make the basic Ptolemaic model useful for functional predictions of the locations of the planets.
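The Fourier analogy can be made concrete with a generic deferent-plus-epicycle sketch: the body's position is the sum of two uniformly rotating vectors, which is exactly a two-term complex Fourier series in time. This is not a model of the Antikythera gearing or of Ptolemy's actual parameters; the radii and speeds below are hypothetical, chosen so that the epicycle produces the retrograde (apparently backward) motion epicycles were introduced to reproduce.

```python
import cmath
import math

def epicycle_position(t, deferent_radius, deferent_speed, epicycle_radius, epicycle_speed):
    """Complex position of a body on an epicycle whose centre circles the observer.

    The path is a sum of two uniformly rotating vectors, i.e. a two-term
    complex Fourier series in t.
    """
    return (deferent_radius * cmath.exp(1j * deferent_speed * t)
            + epicycle_radius * cmath.exp(1j * epicycle_speed * t))

def apparent_angular_speed(t, *params, dt=1e-5):
    """Rate of change of the direction in which the observer sees the body (central difference)."""
    before = cmath.phase(epicycle_position(t - dt, *params))
    after = cmath.phase(epicycle_position(t + dt, *params))
    return (after - before) / (2 * dt)

# Hypothetical parameters: deferent radius 1 at speed 1, epicycle radius 0.5 at speed 5.
params = (1.0, 1.0, 0.5, 5.0)

# At t = 0 the two vectors line up (body farthest away); at t = pi/4 they point in
# opposite directions (body closest) and the epicycle's motion outruns the deferent's.
print("far point:", apparent_angular_speed(0.0, *params))
print("near point:", apparent_angular_speed(math.pi / 4, *params))
```

A positive speed means ordinary (prograde) motion; the negative value at the near point is the retrograde loop.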

I think this post is almost spot on.

Always need to get a jab in on Prescott? Using a recent quote to try and infer "priors" from decades ago is a stretch. It couldn't be that he looked at the situation and saw it differently than you and I? No need to make baseless remarks about priors that apparently come from the sky.

This sentence -- "It couldn't be that he looked at the situation and saw it differently than you and I." -- is literally what the word "prior" means. Literally.


Buahahahahaha yup!

The reason Neoclassicals dominate is that it's easier to ASSUME human behavior than to actually STUDY human behavior. This is reflected in the stubborn attachment to rational expectations theory. In my studies of aggregate human behavior (a.k.a. Sociology) there is nothing rational about human behavior. When people make economic decisions, they usually do so out of fear (fear of eviction, fear of having utilities shut off, etc.).

That's not to say there's nothing to be learned from the Neos. They were certainly right on with stagflation. The only thing we don't have now is inflation. We have high U6 and low growth. There's a classical model in there somewhere.

But assuming that all humans make decisions based on some sort of rationality is simply intellectual laziness.

You have no idea what 'rational' in an economic context means. How can you think yourself knowledgeable enough to comment on the validity of economics without knowing this one thing?

DSGE is already considered a degenerate research program. So, well, we know that now.

Financial frictions are important and will continue to be considered important, but you need to take them out of the useless DSGE paradigm.

Thanks for the link to Lakatos, that's a framework I had not been exposed to.

Krugman's post suggests that he regards degeneracy as a virtue of the Keynesian research program over less degenerate programs. Perhaps this is what Keynes meant when he said, "When the facts change, I change my mind..."

This is something that most technical people in other fields recoil from when they are exposed to mathematical social sciences like macro. I.e. the self-deluding practice of developing a highly technical-sounding theory of unmeasurables and weak correlations, rationalizing it through plausible inductive reasoning ("not even wrong"), and adding more unobservable parameters as you go along in order to explain the recent past. Noah described the best defense as "it's the best we've got".

As always, the armchair philosophers of science and armchair economists come out of the woodwork to declare how obvious it was to them that DSGE methods have always been wrong. So knowledgeable, so brave.

There were a lot of people who did not like DSGE for all sorts of reasons from the very beginning. That they have spectacularly failed the ultimate prediction test is only a further reason for people to say enough is enough.

Models with large numbers of adjustable parameters can memorize small sets of correlated observations. The old Keynesian models didn't allow for stagflation because stagflation was not present to any noticeable extent in the training data. Old Keynesians point out that heaping more adjustable parameters onto these models, and then re-fitting them to data featuring stagflation, yields predictions of stagflation. In other news, grass is green.
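The memorization point can be seen in a toy example. Everything here is hypothetical: the data come from the line y = x plus a deterministic alternating error of ±0.3 standing in for noise. A polynomial with one coefficient per observation (Lagrange interpolation) reproduces all eight points exactly, while a two-parameter straight line does not; between the observations, the comparison reverses.

```python
def make_data(n=8, noise=0.3):
    """Hypothetical observations of y = x with an alternating +/-noise error."""
    xs = [float(i) for i in range(n)]
    ys = [x + noise * (-1) ** i for i, x in enumerate(xs)]
    return xs, ys

def lagrange_predict(xs, ys, x):
    """Degree n-1 polynomial through all n points: one free parameter per observation."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total

def linear_fit(xs, ys):
    """Ordinary least squares with two parameters (intercept, slope)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - slope * mx, slope

xs, ys = make_data()
a, b = linear_fit(xs, ys)

# In-sample: largest gap between each model and the observed data.
in_sample_interp = max(abs(lagrange_predict(xs, ys, x) - y) for x, y in zip(xs, ys))
in_sample_linear = max(abs(a + b * x - y) for x, y in zip(xs, ys))

# Out-of-sample: largest gap from the true line y = x halfway between observations.
midpoints = [x + 0.5 for x in xs[:-1]]
out_interp = max(abs(lagrange_predict(xs, ys, x) - x) for x in midpoints)
out_linear = max(abs(a + b * x - x) for x in midpoints)

print(f"in-sample max error:  interpolation {in_sample_interp:.2e}, linear {in_sample_linear:.3f}")
print(f"out-of-sample max error vs truth: interpolation {out_interp:.3f}, linear {out_linear:.3f}")
```

The perfect in-sample fit says nothing about the model's quality; it only says the model had enough parameters to absorb the noise.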

At the other extreme, models with tremendously detailed structure, so long as the structure is sufficiently complex, can also memorize small sets of correlated observations. The DSGE models failed to predict the financial crisis because financial crises of the most recent variety were not present to any noticeable extent in the training data. Financial-friction new Keynesians point out that adding still more structure to these models, and then re-fitting them to data featuring financial crises, yields predictions of financial crisis. In other news, the sky is blue.

There exists an alternative that is well-studied outside of macroeconomics, but seems to be poorly understood within macroeconomics. It provides techniques for using data to learn not only the parameter values of a pre-specified structure, but also to learn the structure itself. Most importantly, it involves using the data to constrain the amount of structure and number of adjustable parameters to a level licensed by the richness of the data. It is called nonparametric statistics.
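As one concrete instance of letting the data set the amount of structure, here is a minimal sketch of Nadaraya-Watson kernel regression, a standard nonparametric estimator, with its bandwidth chosen by leave-one-out cross-validation. The bandwidth plays the role of model complexity: small values allow a wiggly fit, large values force a nearly flat one. The data, the Gaussian kernel, and the candidate bandwidths are hypothetical choices for illustration, not anything specified in the comment.

```python
import math

def nw_predict(xs, ys, x, h, skip=None):
    """Nadaraya-Watson estimate at x with a Gaussian kernel of bandwidth h.

    If skip is an index, that observation is left out (used for leave-one-out CV).
    """
    num = den = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        if i == skip:
            continue
        w = math.exp(-0.5 * ((x - xi) / h) ** 2)
        num += w * yi
        den += w
    return num / den

def loo_cv_error(xs, ys, h):
    """Mean squared leave-one-out prediction error for bandwidth h."""
    errs = [(ys[i] - nw_predict(xs, ys, xs[i], h, skip=i)) ** 2 for i in range(len(xs))]
    return sum(errs) / len(errs)

def choose_bandwidth(xs, ys, candidates):
    """Pick the candidate bandwidth with the smallest leave-one-out error."""
    scores = {h: loo_cv_error(xs, ys, h) for h in candidates}
    return min(scores, key=scores.get), scores

# Hypothetical data: a smooth signal plus a deterministic alternating disturbance.
xs = [i / 20 for i in range(21)]
ys = [math.sin(2 * math.pi * x) + 0.3 * (-1) ** i for i, x in enumerate(xs)]

candidates = [0.02, 0.05, 0.1, 0.2, 0.5]
best_h, scores = choose_bandwidth(xs, ys, candidates)
for h in candidates:
    print(f"h={h:<5} LOO MSE={scores[h]:.4f}")
print("bandwidth selected by the data:", best_h)
```

The structure is not fixed in advance: with more (or less noisy) data, the same procedure is free to choose a smaller bandwidth and hence a more detailed fit.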

The more data we have, and the less correlated it is, the more structure and more adjustable parameters we can utilize without simply chasing noise. If it turns out that the theories macroeconomists contemplate are simply too structured and/or too flexible to learn from the available time series, then those theories will simply have to wait until better data is available. Until then, the corresponding models will only be able to function as just-so stories, rather than progressively improving scientific explanations.

Ram, what's your opinion of this model?

http://informationtransfereconomics.blogspot.com/2014/06/the-information-transfer-model.html

I'd probably have to spend more time than I have at the moment to say something constructive about this. Seems interesting at first glance. One thing you might want to look into is some measure of model complexity. The general problem I'm noting about macroeconomic models is that they're too complex relative to the richness of the available data. If you can calculate some bounds on the predictive risk of the model, given the available data, you might get some insight into whether this model is even amenable to statistical learning. If it turns out that the in-sample fit underestimates the predictive risk by anywhere from 0 to infinity, then we're not going to be able to say anything interesting about the model with today's data.
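One simple way to see how far in-sample fit understates predictive risk is leave-one-out error for ordinary least squares, which has a closed form: the left-out residual equals $e_i / (1 - h_{ii})$, where $e_i$ is the ordinary residual and $h_{ii}$ the leverage of observation $i$. The sketch below (hypothetical data, a one-regressor model) checks that shortcut against brute-force refitting. It gives an estimate of predictive risk rather than the bound mentioned above, and for serially correlated time series plain leave-one-out can itself be optimistic.

```python
import math

def ols(xs, ys):
    """Intercept and slope of a least-squares line."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - slope * mx, slope

def leverages(xs):
    """Diagonal of the hat matrix for a regression on a constant and x."""
    n = len(xs)
    mx = sum(xs) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    return [1 / n + (x - mx) ** 2 / sxx for x in xs]

# Hypothetical data.
xs = [float(i) for i in range(10)]
ys = [1.0 + x + 0.4 * math.sin(3 * x) for x in xs]

a, b = ols(xs, ys)
resid = [y - (a + b * x) for x, y in zip(xs, ys)]
in_sample_mse = sum(e ** 2 for e in resid) / len(resid)

# Leave-one-out residuals via the closed-form shortcut...
loo_shortcut = [e / (1 - h) for e, h in zip(resid, leverages(xs))]
# ...and by actually refitting without each observation.
loo_brute = []
for i in range(len(xs)):
    a_i, b_i = ols(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
    loo_brute.append(ys[i] - (a_i + b_i * xs[i]))
loo_mse = sum(e ** 2 for e in loo_brute) / len(loo_brute)

print(f"in-sample MSE {in_sample_mse:.4f}, leave-one-out MSE {loo_mse:.4f}")
```

Because every leverage lies strictly between 0 and 1, each left-out residual is larger in magnitude than its in-sample counterpart, so the in-sample error is always the more flattering number.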

This is not to say, however, that clever people shouldn't continue to build complex models like yours. The point is that if we want increasing data to guide our scientific efforts, we need to limit ourselves to models of a complexity that can be accommodated by the available data. Otherwise, we're just describing possible worlds our distance from which we have no ability to gauge.

Ram, thanks for taking a look and your reply. I should make it clear though that the model I linked to is not mine: Jason Smith created it.

Here's the short "hard core" of the theory:

http://informationtransfereconomics.blogspot.com/2014/06/hard-core-information-transfer-economics.html

This is sad, really.

The likes of Hawtrey, Fisher and Cassel got it right in the 20s - and all the new stuff since then has been idiotic.

Keynesians with their zero-lower-bound illusion. As if the central bank can't choose another instrument.

RBC and their denial of obvious facts like sticky wages.

And now, post-Keynesians with their fixation on finance, when its importance is just an accidental side effect of using interest rates as a policy instrument.

No wonder people think macroeconomics is useless. Because, as the field stands today, it actually is.

I was around (and building a Keynesian macro model) in the 1970s, so may have something useful to say on this. I think it is important to remember that the pre-1970 data on which such models were estimated came from a fixed exchange rate regime. This meant that (particularly for the UK, where much of the early work on the Phillips curve was done) it was perfectly sensible to treat price expectations as anchored rather than responding to domestic policy changes. Any attempt to operate the economy at 'too high' a level of demand would lead to current account deficits rather than accelerating inflation. The same was to some extent true of the US, but the US worried less about such deficits because the dollar was the key currency.

After the breakdown of Bretton Woods things obviously changed - my recollection is that most Keynesians moved fairly quickly to accepting the accelerationist argument (even if they argued that in the short run there would be lags because of long-term wage contracts). There was also a great deal of valuable research on time series econometrics, which suggested that many of the estimated relationships in 1970s-era models were unsatisfactory for purely statistical reasons (mainly nonstationarity of the underlying series): fixing these problems would lead to much more stable models. However, it was easy for critics to present the predictive failure of the models as the result of a lack of rigorous microfoundations, and very tempting (largely for ideological reasons) to put forward an alternative view in which macroeconomic policy is either ineffective or damaging. If you accept this alternative view, precise structural parameter estimates based on historical data are unnecessary, since you aren't going to undertake any policy interventions anyway. So you don't need to estimate your RBC (or DSGE) model, and the fact that it may not be consistent with observed data becomes irrelevant: if pressed you can argue (as Prescott famously did) that 'Theory is ahead of Measurement'. I think you have to link this change in the criteria used to evaluate models to the simultaneous change in the perceived scope of macroeconomic policy, and in the underlying aims of macroeconomics.
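The nonstationarity problem can be illustrated with the classic spurious-regression experiment: regress one random walk on another, independent one, and the usual t-test finds a "significant" relationship far more often than its nominal 5%; differencing both series first largely removes the problem. This is a minimal sketch with simulated data, not a reconstruction of any 1970s model; the sample size, number of simulations, and seed are arbitrary assumptions.

```python
import math
import random

def slope_t_stat(x, y):
    """t-statistic on the slope from regressing y on a constant and x (classical OLS standard error)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    intercept = my - slope * mx
    ssr = sum((b - intercept - slope * a) ** 2 for a, b in zip(x, y))
    return slope / math.sqrt(ssr / (n - 2) / sxx)

def random_walk(n, rng):
    """Cumulative sum of independent standard normal shocks."""
    level, path = 0.0, []
    for _ in range(n):
        level += rng.gauss(0.0, 1.0)
        path.append(level)
    return path

def rejection_rate(n_obs=100, n_sims=200, seed=0, difference=False):
    """Share of simulations in which |t| > 1.96 although x and y are independent."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sims):
        x, y = random_walk(n_obs, rng), random_walk(n_obs, rng)
        if difference:
            x = [b - a for a, b in zip(x, x[1:])]
            y = [b - a for a, b in zip(y, y[1:])]
        if abs(slope_t_stat(x, y)) > 1.96:
            rejections += 1
    return rejections / n_sims

levels_rate = rejection_rate()
differenced_rate = rejection_rate(difference=True)
print(f"nominal size 5%: levels {levels_rate:.0%}, first differences {differenced_rate:.0%}")
```

A relationship estimated in levels on trending series can therefore look strong in sample and then break down out of sample, for reasons that have nothing to do with microfoundations.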

Lars Syll vs. P. Krugman on methodology continues...

Lars P Syll:

"Paul Krugman — a case of dangerous neglect of methodological reflection"

http://larspsyll.wordpress.com/2014/06/29/paul-krugman-a-case-of-dangerous-neglect-of-methodological-reflection/